so. a little bit of background. a few years ago (as in like 5-6 years ago), this chinese company developed a mobile gacha game based off dengeki bunko fighting climax. they called it dengeki bunko: crossing void. the original game was a fighting game (shocker) that featured a bunch of characters from different dengeki bunko titles. the most important thing to know about this is that durarara is under dengeki bunko.

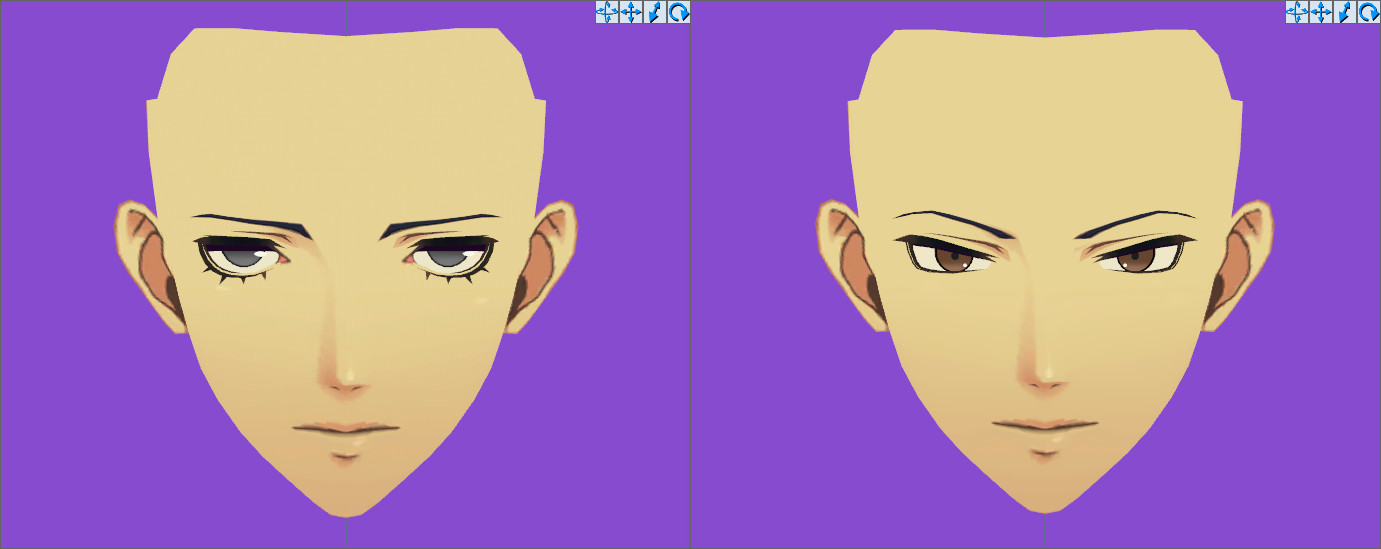

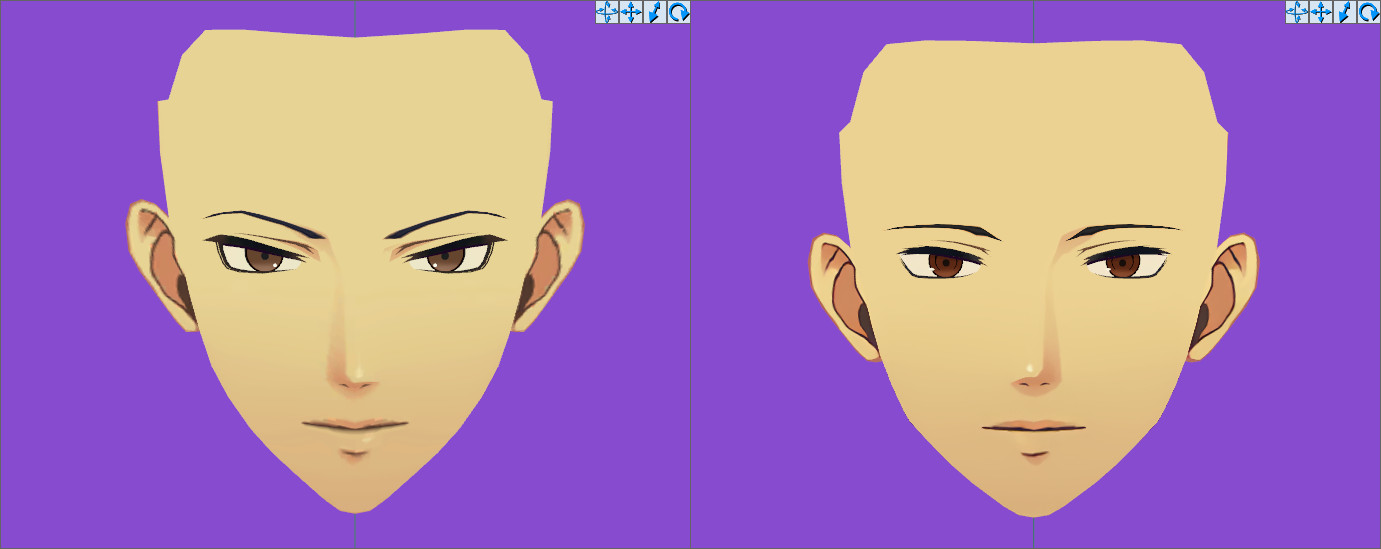

so anyways. it took a while for the global release to come out. i was particularly excited for this release because there were 2d animated models. isn't he so cute... 👨‍❤️‍💋‍👨👨‍❤️‍💋‍👨👨‍❤️‍💋‍👨

now keep in mind, at this point, i only knew of live2d. i did not know of any other engines. so i was like, 'wow, i can't wait to just datamine the game files, grab the .moc file, and put it into this live2d viewer that i already have downloaded, because live2d is a very easy program to deal with'

it was not live2d.

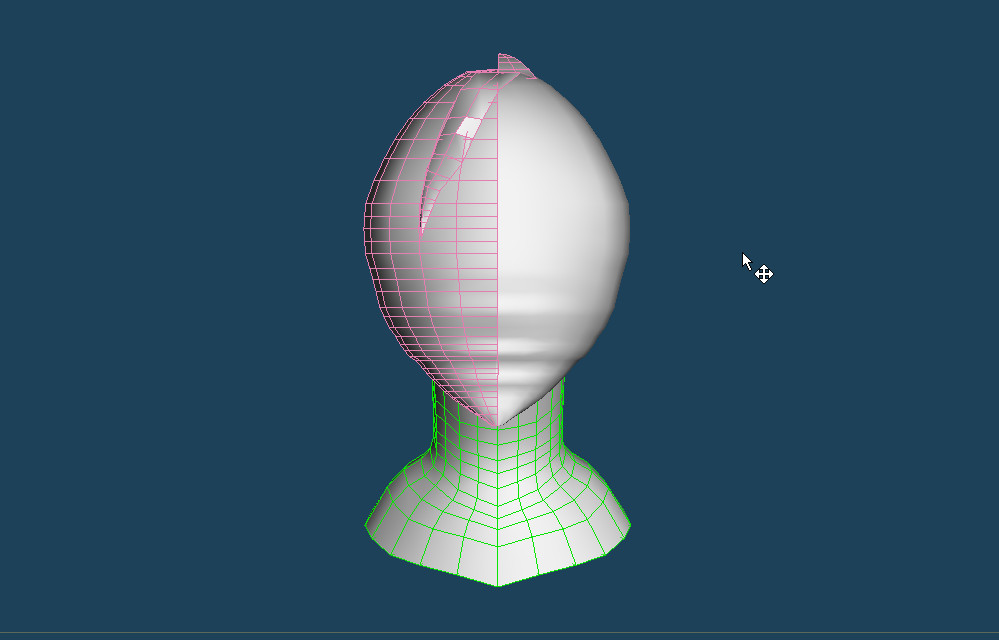

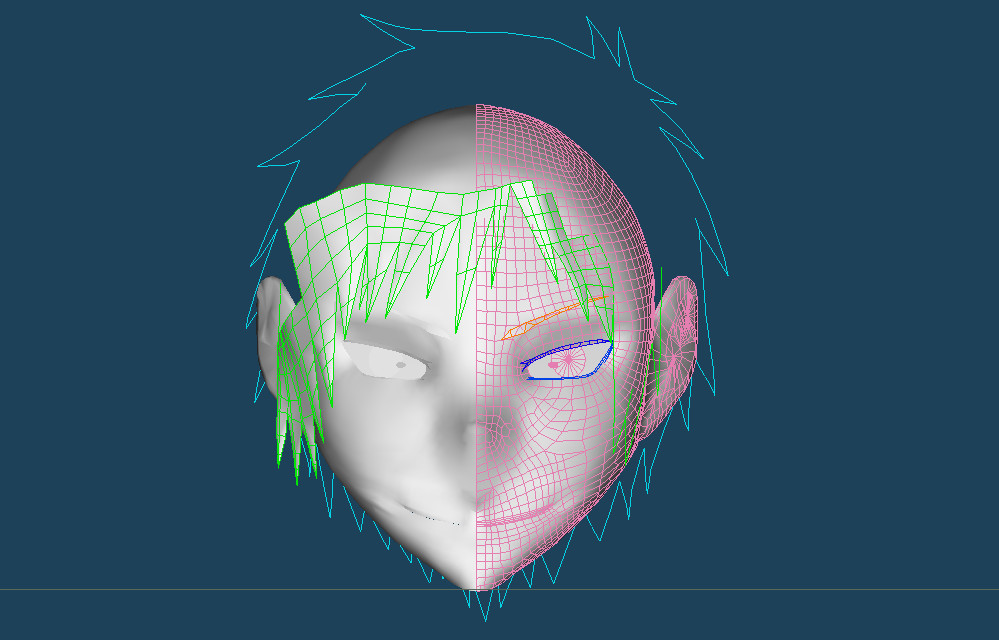

when i started looking in the files, i was super lost, because i couldn't find anything pertaining to live2d at all. what i did find, however, was a folder called 'emote'. it kept sticking out to me for some reason, so after a bit of googling (i at least knew the game engine was unity because it's fucking unity), i found a few programs to help me unpack the files and get the stuff from inside. so i did all that!

and then i ended up with the texture file for the model (this was huge for me) and a large file that had no file extension.

i opened it in a text editor to check out the header, because i was hoping that would give me a clue, and it did! it said 'psb'. but... i didn't know what a psb was. so i googled that, and the first results were about it being a photoshop file format. but that obviously wasn't the case here, so i had to dig a little more. that's when i learned about emofuri and the e-mote engine, and i was like, 'oh, so this game doesn't use live2d, and instead uses another engine that has virtually no support, and is likely going to make my life much harder than it needs to be.'
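i just eyeballed the header in a text editor, but the same check can be sketched in a few lines of python. this is a minimal sketch, assuming the file starts with the ascii bytes 'PSB' (which is what showed up for me); the function name and the example path are made up for illustration.

```python
from pathlib import Path

# assumption: e-mote psb files begin with the ascii bytes "PSB"
PSB_MAGIC = b"PSB"


def looks_like_psb(path):
    """Return True if the file at `path` starts with the PSB magic bytes."""
    with Path(path).open("rb") as f:
        # read only as many bytes as the magic is long and compare them
        return f.read(len(PSB_MAGIC)) == PSB_MAGIC
```

something like `looks_like_psb("extracted/izaya_model")` (hypothetical path) would then tell you whether that mystery file with no extension is worth feeding to an e-mote tool.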

and it did! because even when i did finally stumble across an amazing github repo for e-mote models, i... got an error. my model wasn't supported. so i had to email the developer... who thankfully got it working and released an update that supported my model! he told me there was a unity sdk for e-mote, but if i didn't have much technical knowledge it wouldn't be worth it... he underestimated me.

within the hour, i had said sdk downloaded onto my computer and unity downloaded and my beautiful angel princess wife loaded into a new project. truthfully, the most difficult part was the stupid settings for whether or not i wanted to render based off the textures or whatever. but i digress. it took a bit more, but i finally installed a recorder that let me record the izaya model and export it as a video so that i could make it into a cute gif!

i'm simplifying the process a lot here. it was really tedious work, and a lot of that was because i was so new to the whole e-mote engine and whatnot. that being said, i had a lot of fun with it all! i learned a lot.

you'd think it ends there, but i actually learned something new this year as well. in the process of remaking my about page, i decided i really wanted to put the izaya emote model on my page... but i couldn't figure out how to get the emofuri webgl sdk to work, so i decided i'd just export a little looping animation as a gif and put it on there and it'd be done.

well, my first problem came before that. i wanted the gif to be transparent, right? but i couldn't find a way to record with a transparent bg in unity, so i had to export the video with a green bg, put it into vegas pro, and chroma key out the green... which was okay. and then i had to export it to a video to turn into a gif......that was where i ran into my next problem.

because mp4 does not support alpha channels! so i had to download quicktime and export it as a .mov file to get my alpha channel... and then i tried converting it to a .webp file, but i couldn't figure out how to make the alpha channel work, so i got really angry and gave up on that. mind you, this entire time, i was trying to gif it by using ezgif... which... was foolish of me. but i digress.

i ended up going with just exporting it as an image sequence and then putting it together using ffmpeg. it was a lot of trial and error. and a lot of me learning new command line parameters. i went between gif and apng, but gifs don't support partial transparency, so all the edges were ugly and jagged, so i went with the apng in the end.
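i didn't save the exact command i landed on, so here's a hedged sketch of the general shape, wrapped in python so it's easy to rerun. the frame naming pattern, frame rate, and output name are all assumptions for illustration; `-framerate`, `-plays`, and `-f apng` are real ffmpeg options, but your own export might need different values.

```python
import subprocess


def build_apng_command(frame_pattern, fps, output_path):
    """Build an ffmpeg command that joins numbered PNG frames into a looping APNG."""
    return [
        "ffmpeg",
        "-framerate", str(fps),  # input frame rate of the image sequence
        "-i", frame_pattern,     # e.g. frames/frame_%04d.png (hypothetical naming)
        "-plays", "0",           # apng muxer option: 0 means loop forever
        "-f", "apng",            # force the apng muxer regardless of file extension
        output_path,
    ]


def make_apng(frame_pattern, fps, output_path):
    """Run ffmpeg; raises CalledProcessError if ffmpeg exits with an error."""
    subprocess.run(build_apng_command(frame_pattern, fps, output_path), check=True)
```

calling `make_apng("frames/frame_%04d.png", 30, "izaya.png")` would need ffmpeg on your PATH and png frames that already have an alpha channel. since apng keeps the full 8-bit alpha from each frame, the soft edges survive instead of getting snapped to fully on or fully off like in a gif.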

and i'm really happy with it! he looks cute... it's almost 100mb, but i didn't want to settle on something ugly and compressed when it comes to izaya. so now you get to see him on my about page...!